Kubernetes vs Docker: this is a comparison you hear more and more often as containerization technology gains ground. Today, more enterprises are restructuring their applications and deploying them to the cloud. To handle production-critical container issues, orchestration tools have entered the picture and become an indispensable part of enterprise container management.

In this article, we compare two popular cloud computing technologies: Kubernetes and Docker. Both are leading players in the container orchestration world. But before that, let’s briefly introduce each of them.

What is Kubernetes?

Kubernetes (commonly abbreviated as K8s) is an open-source container orchestration platform that automates application deployment, scaling, and management. It was originally designed by Google and is now maintained by the Cloud Native Computing Foundation (CNCF). It runs and coordinates applications across a cluster of machines and works with a range of container tools, including Docker. This enterprise-grade platform is designed to manage the complete life cycle of containerized services and applications using methods that offer a high degree of flexibility, scalability, and power.

What is Docker?

Docker is a containerization technology that packages your applications and their dependencies into lightweight, portable containers so that they run consistently in any environment. Launched in 2013, it is an open-source framework that popularized containers and drove the trend towards containerization in software development, an approach now associated with cloud-native development. Docker serves as a major building block for creating microservices architectures, deploying code with standardized continuous integration, and building highly scalable platforms for developers.

Kubernetes Features

- Storage Orchestration – Kubernetes allows you to mount a storage system of your choice to run applications, e.g. local storage, a public cloud provider, or a shared network storage system.

- Horizontal Auto Scaling – With Kubernetes, you can not only scale resources vertically but also horizontally with a simple command or Kubernetes UI.

- Automatic Rollbacks and Rollouts – Kubernetes rolls out changes to your application gradually, ensuring that it doesn’t take down all your instances at once. If anything goes wrong with the application or its configuration, Kubernetes rolls back the changes.

- Service Discovery and Load Balancing – There is no need to modify the application to use a new service discovery system when using Kubernetes: it assigns IP addresses to Pods and a single DNS name to a group of Pods, and can load-balance traffic across them.

- Health Check Capability – Kubernetes constantly checks the health of the app and if an app fails to respond, for example when it runs out of memory, Kubernetes automatically restarts the application.
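The rollout and rollback behaviour listed above can be illustrated with a toy model. This is only a sketch of the idea, not how Kubernetes implements it: `rolling_update`, its parameters, and the `is_healthy` callback are hypothetical names introduced for illustration.

```python
from typing import Callable, List


def rolling_update(
    instances: List[str],
    new_version: str,
    is_healthy: Callable[[str], bool],
    batch_size: int = 1,
) -> List[str]:
    """Replace instances batch by batch; restore the original list on failure."""
    original = list(instances)  # snapshot used for rollback
    current = list(instances)
    for start in range(0, len(current), batch_size):
        batch = range(start, min(start + batch_size, len(current)))
        for i in batch:
            current[i] = new_version
        # Stop and roll back as soon as an updated instance fails its check.
        if not all(is_healthy(current[i]) for i in batch):
            return original
    return current
```

Because only one batch is replaced at a time, the remaining instances keep serving traffic while the new version is checked, which is the property the feature list describes.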

Docker Features

- Increased Productivity – By simplifying technical set-up and speeding up application deployment, Docker increases productivity. It not only runs an application in an isolated environment, but also reduces the resources required to do so.

- Software-defined Networking – Docker supports software-defined networking: the Docker Engine and Command Line Interface (CLI) enable operators to define isolated networks for containers without touching a single router.

- Scalability – With Docker, it becomes simpler and easier to associate the containers together to build your application, making the scalability of the components easy.

- Flexibility and Modularity – With Docker, running and monitoring containerized applications is more powerful and flexible and it lets you easily break down your application’s functionality into individual containers.

- Application Isolation – Docker offers containers that enable applications to execute in an isolated environment. Every container is independent, which allows developers to run any type of application.

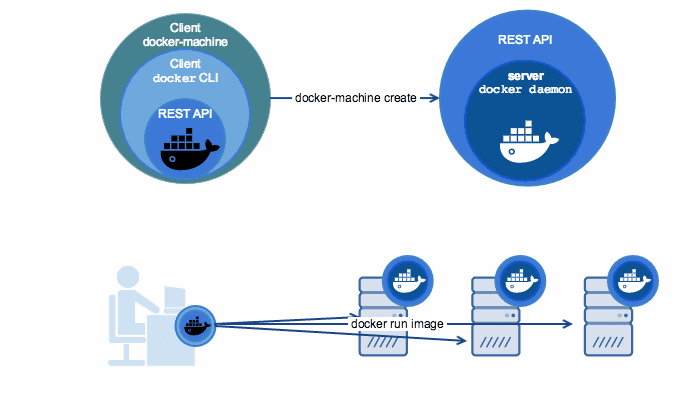

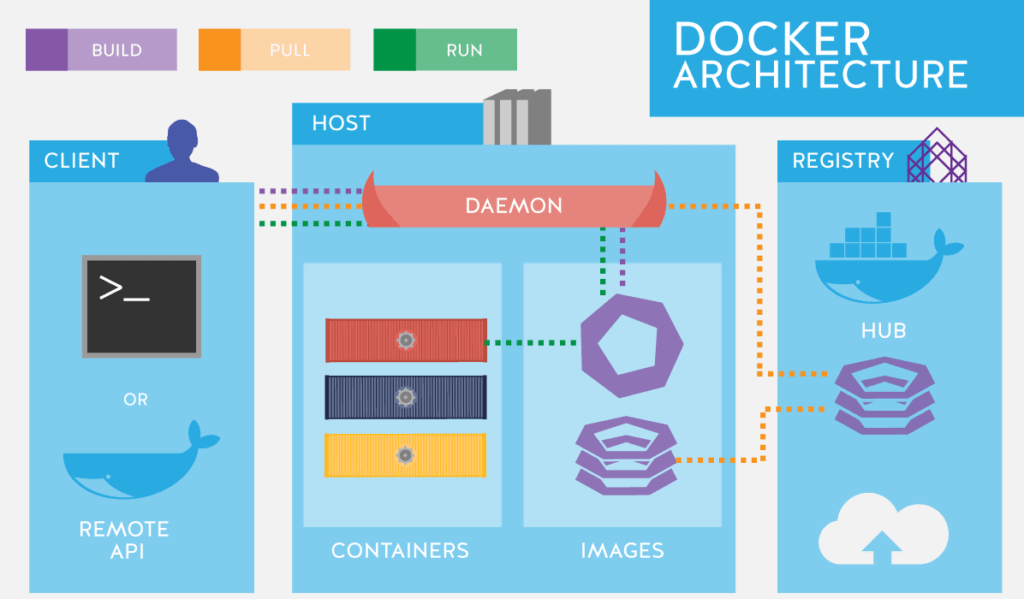

Docker Architecture: How Docker Works

Docker architecture is based on the client-server model and to understand how Docker works to create containerized applications, let’s take a closer look at its architecture diagram:

Docker Architecture Diagram

As you can see in the diagram above, the Docker Client communicates with the Docker Daemon, which is responsible for building, running, and distributing Docker containers. The client and the daemon can run on the same host, or the client can connect to a remote daemon. They communicate through a REST API, over UNIX sockets or a network interface. Now, let’s dive a little deeper into the architecture:
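To make the client-daemon exchange concrete, here is a minimal sketch of a client that sends one REST request to the daemon. It assumes a Linux host where the daemon listens on the default socket `/var/run/docker.sock` and uses the Engine API endpoint `GET /containers/json`. The class and function names are our own, and the official CLI is of course far more elaborate.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that talks over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def list_containers(socket_path: str = "/var/run/docker.sock") -> list:
    """Ask the daemon for its running containers, much as `docker ps` does."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", "/containers/json")
        response = conn.getresponse()
        return json.loads(response.read())
    finally:
        conn.close()
```

Calling `list_containers()` requires a running daemon and permission to access the socket; nothing is contacted until the function is called.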

Docker Client

Docker Client enables users to interact with Docker. It provides a CLI to build, run, or stop applications, and sends your instructions to the Docker Daemon. Its main purpose is to offer a direct way to pull images from a registry and have them run on the host.

Docker Host

Docker Host provides the environment in which applications run. It comprises the Docker Daemon and components such as images, containers, storage, and networking.

Docker Daemon

Docker Daemon manages all container-related operations. It receives commands via the CLI or the REST API and executes the commands sent by the Docker Client, which covers building, running, and distributing containers. It can also communicate with other daemons to manage its services.

Docker Components

Various components are used to build and assemble your application. Below is a brief overview of the main Docker components:

Docker Images

Docker Images are read-only binary templates built from a set of instructions written in a Dockerfile. An image serves as the blueprint from which Docker Containers are created with the run command. Images also hold metadata that describes a container’s requirements and capabilities. Each instruction in a Dockerfile creates a layer, and when you add or change an instruction, only that layer and the ones after it are rebuilt; this layering keeps images lighter, faster, and smaller than those of other virtualization technologies.
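The effect of layering can be sketched with a simplified model in which each layer's ID depends on its instruction and on the layer beneath it. This is an illustrative assumption, not Docker's actual cache algorithm (which also considers the content of copied files, among other things). `layer_ids` and `reusable_layers` are hypothetical helpers.

```python
import hashlib
from typing import List


def layer_ids(instructions: List[str]) -> List[str]:
    """Derive one ID per instruction, chained to the ID of the layer below."""
    ids = []
    parent = ""
    for instruction in instructions:
        digest = hashlib.sha256((parent + "\n" + instruction).encode()).hexdigest()
        ids.append(digest[:12])
        parent = digest
    return ids


def reusable_layers(old: List[str], new: List[str]) -> int:
    """Count the leading layers that are identical in both builds."""
    count = 0
    for old_id, new_id in zip(layer_ids(old), layer_ids(new)):
        if old_id != new_id:
            break
        count += 1
    return count
```

In this model, changing the last instruction of a four-line Dockerfile leaves the first three layers reusable, while changing the first line invalidates every layer. That is why frequently changing instructions are usually placed near the end of a Dockerfile.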

Docker Containers

A Docker Container is a running instance of a Docker Image and contains the entire package required to run an application. It is defined by the image and any configuration options provided when the container is started. You can connect a container to multiple networks, attach storage to it, or create a new image based on its current state.

Docker Storage

Docker lets you store data within the writable container layer, which requires a storage driver. This storage is non-persistent: the data is lost when the container is removed. For persistent data, the following four options are available:

- Data Volumes – Data Volumes provide persistent storage. They can also be named and listed, along with the containers associated with a given volume.

- Data Volume Container – A dedicated container that hosts a volume and can be mounted by other containers. The volume container is independent of the application container and can be shared across multiple containers.

- Directory Mounts – When it comes to Directory Mounts, any directory on the host can be used as a source for the volume.

- Storage Plugins – Storage plugins enable the connection to external storage platforms. These plugins assign storage from the host to an external source.

Docker Networking

Docker offers various networking options that let containers communicate with each other through the host machine. There are two types of networks for Docker containers: default Docker networks and user-defined networks. Default networks are created when you install Docker, while user-defined networks come in the following types:

- Bridge – Unlike the default bridge network, a user-defined bridge network requires no port forwarding for containers inside the network to communicate with each other and fully supports automatic network discovery.

- Overlay – This network is used when containers on separate hosts need to communicate. It requires swarm mode to be enabled for the cluster of Docker Engines.

- Macvlan – While using bridge and overlay networks, a bridge is formed between the host and the container. With Macvlan network, this bridge is removed, providing a benefit of exposing container resources to the outside networks without port forwarding.

Docker Registry

A Docker Registry is a repository of images from which you can run your Docker environment. There are public and private registries. Docker Hub and Docker Cloud are public registries where multiple users collaborate to build applications. By default, Docker is configured to access images on Docker Hub, and if you use Docker Datacenter (DDC), it includes Docker Trusted Registry (DTR).

How Does It Work?

When you use the docker pull or docker run commands, the required images are pulled from your configured registry. When you use the docker push command, your image is pushed to your configured registry.
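Which registry a pull or push goes to is determined by the image name itself. The sketch below shows a simplified version of that resolution, assuming the usual conventions that Docker Hub (`docker.io`) is the default registry, that official images live under `library/`, and that the default tag is `latest`. It ignores digests (`@sha256:...`), and `parse_image_ref` is a hypothetical helper rather than part of any Docker library.

```python
from typing import NamedTuple


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def parse_image_ref(ref: str) -> ImageRef:
    """Split 'registry/repository:tag', filling in Docker Hub defaults."""
    first, slash, rest = ref.partition("/")
    # The first component names a registry only if it looks like a host.
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = "docker.io", ref
    # A colon after the last slash separates the tag from the repository.
    name, colon, tag = remainder.rpartition(":")
    if not colon or "/" in tag:
        name, tag = remainder, "latest"
    if registry == "docker.io" and "/" not in name:
        name = "library/" + name  # official images live under library/
    return ImageRef(registry, name, tag)
```

For example, `parse_image_ref("nginx")` resolves to the `library/nginx` repository on `docker.io` with the tag `latest`, whereas a name that starts with a host such as `registry.example.com:5000` (a made-up address) is sent to that private registry instead.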

Kubernetes Architecture: How Kubernetes Works

In this section, we will take a closer look at different concepts that will help you understand how Kubernetes works:

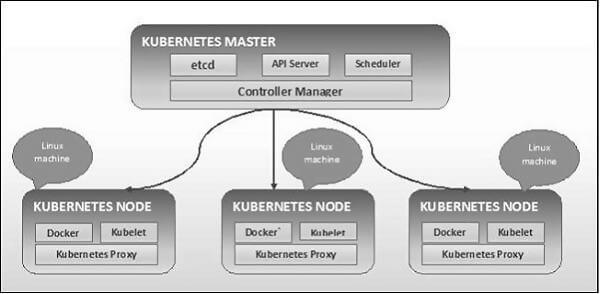

Kubernetes Architecture Diagram

As seen in the Kubernetes diagram above, Kubernetes follows a client-server architecture. A single master server acts as the controlling node and comprises several components, each responsible for different actions. Let’s discuss the components of the Kubernetes Master in detail:

etcd

etcd is a simple key-value store that holds the state of the Kubernetes cluster, such as the number of pods, their state, and their namespace. It also stores configuration details, including Secrets, subnets, and ConfigMaps, which can be used by each of the nodes in the cluster.

API Server

API Server is a key component that provides internal and external interfaces to Kubernetes. The server validates and processes all REST requests and updates the state of the API objects in etcd, enabling clients to configure workloads and containers across Worker nodes.

Controller Manager

Controller Manager runs a non-terminating loop that drives the actual cluster state towards the desired cluster state, communicating with the API server to create, update, and remove the resources it manages. It is a process that manages different kinds of controllers, including the replication, namespace, service account, and endpoint controllers. It is also responsible for lifecycle operations such as namespace creation and lifecycle, cascading-deletion garbage collection, and event garbage collection.
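The idea of a loop that moves actual state towards desired state can be sketched in a few lines. This is a toy model under our own assumptions: state is reduced to replica counts per workload, every action succeeds immediately, and the loop is bounded so that it terminates. `reconcile` and `control_loop` are hypothetical names, not Kubernetes APIs.

```python
from typing import Dict, List


def reconcile(desired: Dict[str, int], actual: Dict[str, int]) -> List[str]:
    """Return the actions needed to move `actual` replica counts to `desired`."""
    actions = []
    for name in sorted(set(desired) | set(actual)):
        want = desired.get(name, 0)
        have = actual.get(name, 0)
        if have < want:
            actions.append(f"create {want - have} pod(s) for {name}")
        elif have > want:
            actions.append(f"delete {have - want} pod(s) for {name}")
    return actions


def control_loop(desired: Dict[str, int], actual: Dict[str, int], max_rounds: int = 10) -> int:
    """Reconcile until nothing is left to do; return the number of rounds used."""
    for round_number in range(max_rounds):
        if not reconcile(desired, actual):
            return round_number
        # In this toy model every action succeeds immediately.
        for name in set(desired) | set(actual):
            actual[name] = desired.get(name, 0)
    return max_rounds
```

The key design point is that the loop compares states rather than replaying events, so it converges to the desired state no matter how the actual state drifted.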

Scheduler

A key component of the Kubernetes Master, the Scheduler is responsible for distributing and tracking workload across cluster nodes: it accepts incoming workload and places pods on available nodes. It also tracks resource usage for each node and accounts for quality-of-service requirements, affinity/anti-affinity rules, data locality, and so on.
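A scheduling decision of this kind is often described as two steps: filter out nodes that cannot fit the pod, then score the rest. The sketch below illustrates that pattern with CPU and memory only. The `Node` class, the `schedule` function, and the scoring rule (prefer the most remaining headroom) are assumptions made for illustration and do not reproduce the real scheduler's policies.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu: float  # cores still unallocated
    free_mem: int    # MiB still unallocated


def schedule(nodes: List[Node], cpu: float, mem: int) -> Optional[str]:
    """Pick a node for a pod: filter by fit, then score by remaining headroom."""
    feasible = [n for n in nodes if n.free_cpu >= cpu and n.free_mem >= mem]
    if not feasible:
        return None  # no node fits, so the pod stays pending
    # Prefer the node left with the most spare capacity after placement.
    best = max(feasible, key=lambda n: (n.free_cpu - cpu, n.free_mem - mem))
    best.free_cpu -= cpu
    best.free_mem -= mem
    return best.name
```

Affinity rules or data locality would appear as additional filter predicates or score terms in the same structure.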

Kubernetes – Node Components

Below are the main components of a node server that are important for communication with the Kubernetes Master:

Docker

Docker is the main component which helps in running encapsulated application containers in a lightweight operating environment.

Kubelet

Kubelet is responsible for monitoring the state of a pod and ensuring that every container on the node is operating properly. This agent runs on each node and communicates with the Master node. It gets Pod specifications from the API server, runs the containers, and ensures they keep running.

Kube-proxy

It acts as a load balancer and network proxy for a service on the worker node and handles the network routing for UDP and TCP packets. Kube-proxy supports service abstraction together with other networking functions.
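The service abstraction can be pictured as a table that maps one stable service name to a changing set of backend endpoints. The sketch below models that table with simple round-robin selection. It is only a conceptual illustration: the real kube-proxy typically programs kernel-level packet rules rather than forwarding requests in application code, and `ServiceProxy` and the endpoint addresses are hypothetical.

```python
import itertools
from typing import Dict, Iterator, List


class ServiceProxy:
    """Maps a service name to its backend endpoints and rotates through them."""

    def __init__(self) -> None:
        self._cycles: Dict[str, Iterator[str]] = {}

    def set_endpoints(self, service: str, endpoints: List[str]) -> None:
        if not endpoints:
            raise ValueError("a service needs at least one endpoint")
        # Copy the list so later changes by the caller do not affect routing.
        self._cycles[service] = itertools.cycle(list(endpoints))

    def route(self, service: str) -> str:
        """Return the endpoint that should receive the next connection."""
        return next(self._cycles[service])
```

Clients only ever address the service name, so endpoints can be replaced through `set_endpoints` without any change on the client side.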

Kubernetes Container Runtime

Container runtime is used to initiate and manage containers and control container images on nodes. It is the component on each node that runs the containers defined in the workloads submitted to the cluster.

Kubernetes vs Docker Use Cases

Docker is a good option for developers to build applications in isolated environments and run them in sandbox environments. However, if you wish to run a large number of containers in the production environment, you may face some complications. For instance, some containers can easily get overloaded. You can manually restart the container, but it can take a lot of time and effort.

Conversely, Kubernetes provides important features like load balancing, high availability, etc., which makes it the most suitable option for highly loaded production environments containing several Docker containers. Still, installing a Docker application is easier than a Kubernetes app, making Docker the best choice for development and testing.

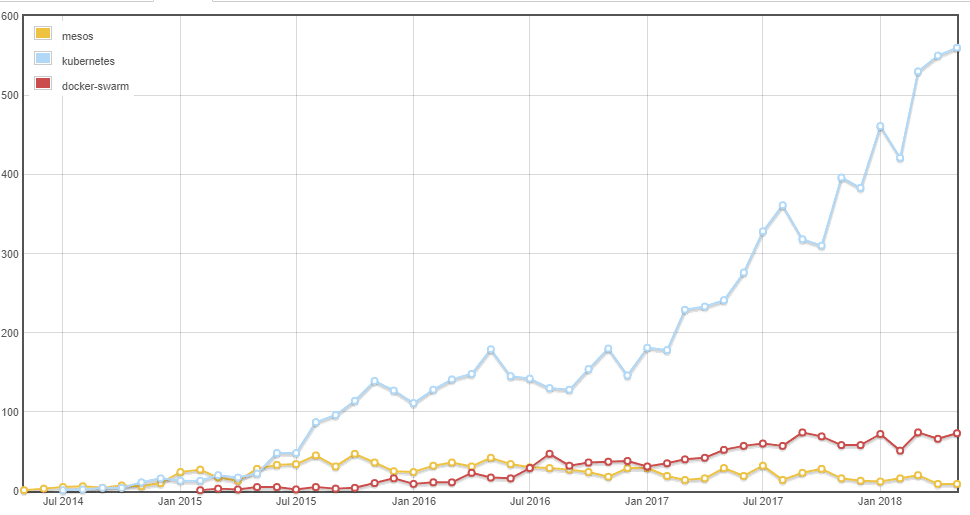

Kubernetes vs Docker Statistics

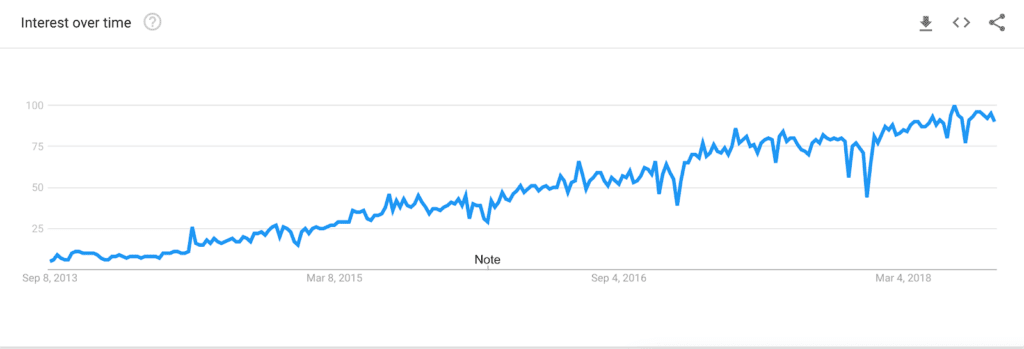

Kubernetes is one of the fastest-growing projects in the history of open-source software. After compiling data from questions asked on StackExchange and GitHub for Kubernetes vs Docker, here is the outcome:

Since its introduction in 2013, Google searches for Docker have seen smooth, sustained growth. Microsoft’s announcement of support for Docker in both Windows 10 and Windows Server 2016 raised its popularity further. Docker has been established as the standard for containerization. Below is the Google Trends graph of searches for Docker over the last five years:

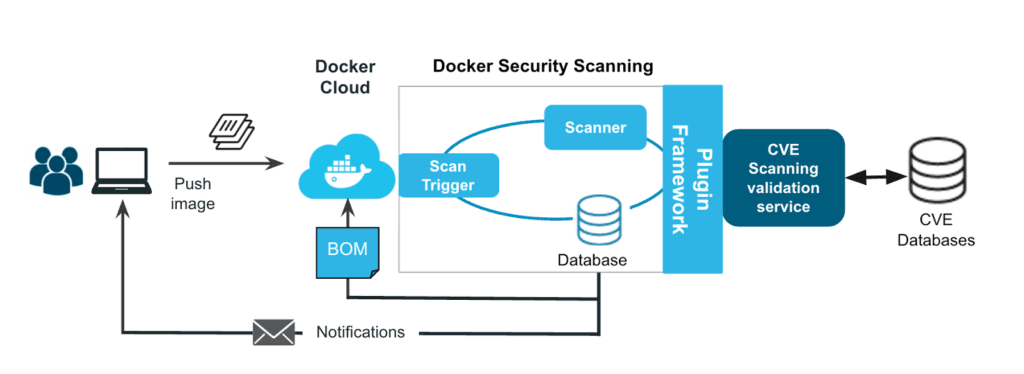

Docker Security

Since its introduction, Docker has been adopted on 20% of all hosts across environments. Technologies with such a rapidly growing adoption rate attract a high volume of malicious attacks, which in turn drives robust security improvements.

Here are the three most pertinent security threats to your Docker deployments:

- Stolen Sensitive Secrets – There’s a chance API keys and passwords for critical infrastructure get stolen if they are not encrypted.

- Poisoned Docker Images – Docker images obtained from untrusted sources can be malware-infected.

- Breaking out of a Container – If Docker is misconfigured or runs vulnerable binaries, an attacker could break out of a container and access the host.

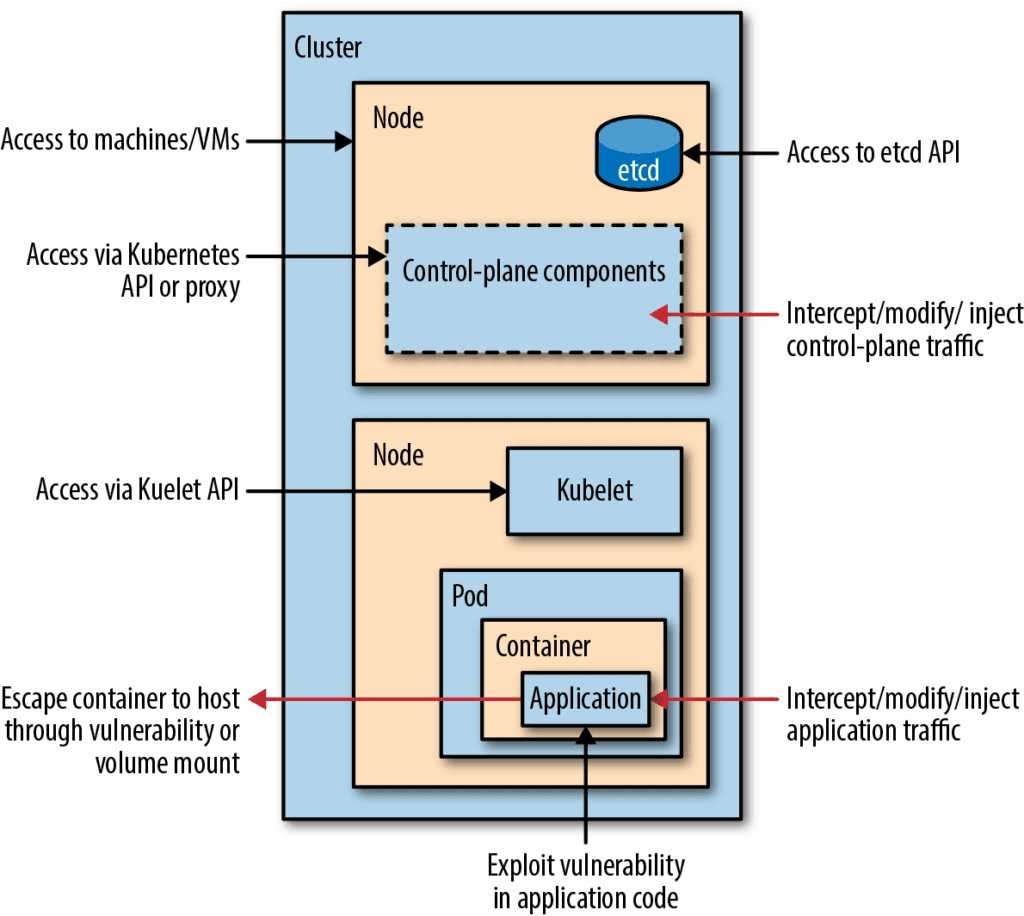

Kubernetes Security

Over the last couple of years, Kubernetes has exploded onto the technological scene, with many major companies adopting it. These companies are now asking how best to secure their Kubernetes deployments and which areas need more focus to reduce the risk of attacks.

First, consider who might attack your system: malicious users or external attackers. Then, secure the management interfaces against unauthorized access from outside the cluster and safeguard the container environment. Kubernetes clusters come with many security mechanisms that help minimize the risk of major security threats.

Docker Swarm vs Kubernetes

When comparing Kubernetes vs Docker Swarm, we see that both orchestration tools offer similar functionality but differ in how they operate. Kubernetes is an open-source system for running containers in production whose design draws on Borg, Google’s internal cluster management system. Docker Swarm, on the other hand, consists of Docker Engines deployed on multiple nodes: manager nodes perform orchestration and management, while worker nodes execute tasks.

Docker Compose vs Kubernetes

If we compare Kubernetes vs Docker Compose, we see that Kubernetes is almost everybody’s favorite. It is an open-source system that orchestrates and scales Docker containers and other microservices. Docker Compose, meanwhile, is a tool driven by a deployment file that predefines multiple containers together with their environment, including networking and volumes. So most users recommend Kubernetes when it comes to Docker Compose vs Kubernetes.

Difference Between Docker and Kubernetes

Though both tools are quite similar in functionality, there are some stark differences when it comes to Docker vs Kubernetes that need to be discussed before you make a decision:

| | Docker | Kubernetes |
| --- | --- | --- |
| Set-up and Installation | Docker can be installed with a one-line command on the Linux platform. To install a single-node Docker Swarm, you can deploy Docker for different platforms. | Installation is complicated, as it requires manual steps to set up the Kubernetes Master. |
| Logging and Monitoring | Docker includes various logging mechanisms that give you information from the processes running containers and services, and it relies on third-party tools for monitoring. | Kubernetes has no native storage solution for log data. However, you can integrate different logging solutions into your Kubernetes cluster. For monitoring, many open-source tools are available, such as Grafana and InfluxDB. |
| Size | Docker Swarm has been scaled and performance-tested on up to 30000 containers. | According to the official Kubernetes documentation, it supports clusters with up to 5000 nodes, based on the following criteria: no more than 5000 nodes, no more than 150000 total pods, and no more than 300000 total containers. |
| Architecture | Docker uses a native clustering solution, a cluster of Docker hosts on which you can deploy services. It has a simple architecture that clusters together different Docker hosts and works with the standard Docker API. | Kubernetes is an orchestration tool based on a client-server model and uses custom plugins to extend its functionality. |
| Load Balancing | It supports automatic load balancing of traffic between containers in the cluster. | Manual intervention is required for load balancing of traffic between multiple containers in multiple Pods. |
| Scalability | Docker supports service-oriented and microservices architectures in which an application is represented by a collection of interconnected containers. As a result, scaling, debugging, and deploying your applications is easier. | Highly scalable; performs auto-scaling as well. |
| Data Volumes | The storage volumes can be shared with any container. | The storage volumes can only be shared with other containers in the same Pod. |

Kubernetes vs Docker: Which is Better?

Docker vs Kubernetes: both are considered top-of-the-line solutions that are continuously improved. Docker offers a simple solution that is quick to get started with, while Kubernetes supports complex, highly demanding tasks. By weighing the features of both in the comparison above, you can choose the right tool for your container orchestration.